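Introduction

Firecrawl is a tool and service for web crawling and web scraping that helps developers extract structured data from websites efficiently. Its main features include:

Web crawling

Starting from a given URL, it recursively traverses and scrapes multiple pages.

Crawl depth, the number of concurrent requests, and similar parameters are configurable.

Web scraping

Extracts specific data (text, links, tables, and so on) from one or more pages.

May support CSS selectors, XPath, or regular expressions for locating content.

Dynamic content support

Renders JavaScript-generated content through a headless browser (such as Puppeteer or Playwright).

A wait time or condition can be configured so that data finishes loading before the page is scraped.

API

Provides a RESTful API and SDKs for integration into existing systems.

May support batch job scheduling and result storage.

Anti-bot handling

Automatically handles common anti-scraping mechanisms (such as User-Agent rotation, IP proxies, and request rate control).

GitHub repository: https://github.com/mendableai/firecrawl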

Installation

Clone the Firecrawl repository

git clone https://github.com/mendableai/firecrawl.git

Cloning creates a directory named firecrawl. All of the configuration and deployment steps below take place inside it.

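Configure the environment variables (.env file)

Find the apps/api/.env.example file in the project directory and copy it to a new file named .env:

# Run from the directory where you ran git clone
cd firecrawl/apps/api
# Copy .env.example to .env
cp .env.example .env

Open the newly created .env file (in apps/api), then check and adjust the following settings: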

PORT=3002
HOST=0.0.0.0
USE_DB_AUTHENTICATION=false
NUM_WORKERS_PER_QUEUE=8
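Before building, it can help to confirm that none of these settings was lost while editing. The following is a minimal sketch; check_env_keys is a hypothetical helper name, not part of Firecrawl, and it only checks that a KEY= line exists, not that the value is sensible.

```shell
# check_env_keys FILE KEY...
# Prints every KEY that has no "KEY=" line in FILE.
# Returns non-zero if at least one key is missing.
check_env_keys() {
  env_file=$1
  shift
  missing=0
  for key in "$@"; do
    if ! grep -q "^${key}=" "$env_file"; then
      echo "missing: $key"
      missing=1
    fi
  done
  return "$missing"
}

# Example (run from firecrawl/apps/api):
#   check_env_keys .env PORT HOST USE_DB_AUTHENTICATION NUM_WORKERS_PER_QUEUE
```

The function prints nothing and returns 0 when all four keys are present.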

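Pre-build preparation

Edit firecrawl/apps/api/Dockerfile. Three main changes were made:

1. Added mirror sources hosted in China to speed up the build.

2. Changed rust:1-slim to rust:1.88-slim. Without this change the build fails with: lock file version 4 was found, but this version of Cargo does not understand this lock file, perhaps Cargo needs to be updated

3. Changed cargo build --release --locked to cargo build --release, so that Cargo is allowed to update Cargo.lock when it is out of date instead of aborting the build.

The modified file is shown below for reference: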

FROM node:22-slim AS base
ENV PNPM_HOME="/pnpm"
ENV PATH="$PNPM_HOME:$PATH"
RUN corepack enable
COPY . /app
WORKDIR /app

# Switch the Debian package sources to a mirror in China
RUN sed -i 's/deb.debian.org/mirrors.aliyun.com/g' /etc/apt/sources.list.d/debian.sources && \
    sed -i 's/security.debian.org/mirrors.aliyun.com/g' /etc/apt/sources.list.d/debian.sources

# Environment variables that speed up Node.js downloads
ENV NODEJS_ORG_MIRROR=https://npmmirror.com/mirrors/node
ENV NVM_NODEJS_ORG_MIRROR=https://npmmirror.com/mirrors/node

# Use the npmmirror registry for npm
RUN npm config set registry https://registry.npmmirror.com

FROM base AS prod-deps
RUN --mount=type=cache,id=pnpm,target=/pnpm/store pnpm install --prod --frozen-lockfile

FROM base AS build
RUN --mount=type=cache,id=pnpm,target=/pnpm/store pnpm install && pnpm run build

# Install Go
FROM golang:1.24 AS go-base
COPY sharedLibs/go-html-to-md /app/sharedLibs/go-html-to-md

# Set a Go module proxy hosted in China, then build
RUN go env -w GOPROXY=https://goproxy.cn,direct && \
    cd /app/sharedLibs/go-html-to-md && \
    go mod tidy && \
    go build -o html-to-markdown.so -buildmode=c-shared html-to-markdown.go && \
    chmod +x html-to-markdown.so

# Install Go dependencies and build parser lib
RUN cd /app/sharedLibs/go-html-to-md && \
    go mod download && \
    go build -o html-to-markdown.so -buildmode=c-shared html-to-markdown.go && \
    chmod +x html-to-markdown.so

# Install Rust
FROM rust:1.88-slim AS rust-base
COPY sharedLibs/html-transformer /app/sharedLibs/html-transformer
COPY sharedLibs/pdf-parser /app/sharedLibs/pdf-parser
COPY sharedLibs/crawler /app/sharedLibs/crawler

# Install Rust dependencies and build transformer lib
RUN cd /app/sharedLibs/html-transformer && \
    cargo build --release && \
    chmod +x target/release/libhtml_transformer.so

# Install Rust dependencies and build PDF parser lib
RUN cd /app/sharedLibs/pdf-parser && \
    cargo build --release && \
    chmod +x target/release/libpdf_parser.so

# Install Rust dependencies and build crawler lib
RUN cd /app/sharedLibs/crawler && \
    cargo build --release && \
    chmod +x target/release/libcrawler.so

FROM base
COPY --from=build /app/dist /app/dist
COPY --from=prod-deps /app/node_modules /app/node_modules
COPY --from=go-base /app/sharedLibs/go-html-to-md/html-to-markdown.so /app/sharedLibs/go-html-to-md/html-to-markdown.so
COPY --from=rust-base /app/sharedLibs/html-transformer/target/release/libhtml_transformer.so /app/sharedLibs/html-transformer/target/release/libhtml_transformer.so
COPY --from=rust-base /app/sharedLibs/pdf-parser/target/release/libpdf_parser.so /app/sharedLibs/pdf-parser/target/release/libpdf_parser.so
COPY --from=rust-base /app/sharedLibs/crawler/target/release/libcrawler.so /app/sharedLibs/crawler/target/release/libcrawler.so

# Install git
RUN apt-get update && apt-get install -y git && rm -rf /var/lib/apt/lists/*

# Start the server by default, this can be overwritten at runtime
EXPOSE 8080

# Make sure the entrypoint script has the correct line endings
RUN sed -i 's/\r$//' /app/docker-entrypoint.sh

ENTRYPOINT "/app/docker-entrypoint.sh"
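The lock file error mentioned above comes from the version number recorded at the top of each Cargo.lock. The sketch below reads that number, so you can tell in advance whether a given Rust image is new enough. lockfile_version is a hypothetical helper name. As far as I know, lock file version 4 is understood by Cargo 1.78 and later, but treat that threshold as an assumption and verify it against the Cargo release notes.

```shell
# lockfile_version FILE
# Prints the top-level "version = N" value of a Cargo.lock file.
# Per-package lines such as version = "1.0.0" are quoted, so they do not match.
# Prints nothing for very old lock files that have no version line.
lockfile_version() {
  sed -n 's/^version = \([0-9][0-9]*\)$/\1/p' "$1" | head -n 1
}

# Example (run from firecrawl/apps/api):
#   lockfile_version sharedLibs/html-transformer/Cargo.lock
```

If the command prints 4, the rust image used in the Dockerfile must ship a Cargo that understands that format, which is why the tag is pinned to rust:1.88-slim here.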

Edit firecrawl/apps/playwright-service-ts/Dockerfile to add the same China-hosted mirrors and speed up the build. My modified file is shown below for reference:

FROM node:18-slim
WORKDIR /usr/src/app

# Switch the Debian package sources to a mirror in China
RUN sed -i 's/deb.debian.org/mirrors.aliyun.com/g' /etc/apt/sources.list.d/debian.sources && \
    sed -i 's/security.debian.org/mirrors.aliyun.com/g' /etc/apt/sources.list.d/debian.sources

# Environment variables that speed up Node.js downloads
ENV NODEJS_ORG_MIRROR=https://npmmirror.com/mirrors/node
ENV NVM_NODEJS_ORG_MIRROR=https://npmmirror.com/mirrors/node

# Use the npmmirror registry for npm
RUN npm config set registry https://registry.npmmirror.com

COPY package*.json ./
RUN npm install
COPY . .

ENV PLAYWRIGHT_BROWSERS_PATH=/tmp/.cache

# Install Playwright dependencies
RUN npx playwright install --with-deps
RUN npm run build

ARG PORT
ENV PORT=${PORT}
EXPOSE ${PORT}

CMD [ "npm", "start" ]

If your server cannot reach GitHub, also edit firecrawl/apps/api/sharedLibs/html-transformer/Cargo.toml and change https://github.com/mendableai/nodesig to the mirror address https://kkgithub.com/mendableai/nodesig.
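The URL substitution can also be scripted rather than done by hand. This is a minimal sketch: use_github_mirror is a hypothetical helper name, and it writes through a temporary file instead of using sed -i so that it behaves the same with GNU and BSD sed.

```shell
# use_github_mirror FILE
# Rewrites the nodesig git URL in FILE to point at the kkgithub.com mirror.
use_github_mirror() {
  tmp_file=$(mktemp) || return 1
  if sed 's#https://github.com/mendableai/nodesig#https://kkgithub.com/mendableai/nodesig#g' "$1" > "$tmp_file"; then
    # Overwrite in place so the original file keeps its permissions.
    cat "$tmp_file" > "$1"
  fi
  rm -f "$tmp_file"
}

# Example (run from the firecrawl root directory):
#   use_github_mirror apps/api/sharedLibs/html-transformer/Cargo.toml
```

Running it a second time is harmless, because the original URL no longer appears in the file.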

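Build and start the containers with Docker Compose

From the firecrawl root directory (where the docker-compose.yaml file lives), build the images:

docker compose build --no-cache

Start the containers:

docker compose up -d

Stop the containers:

docker compose down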

Testing

After the containers start, open http://[server-ip]:3002/test. If the page shows Hello, world!, the installation succeeded.

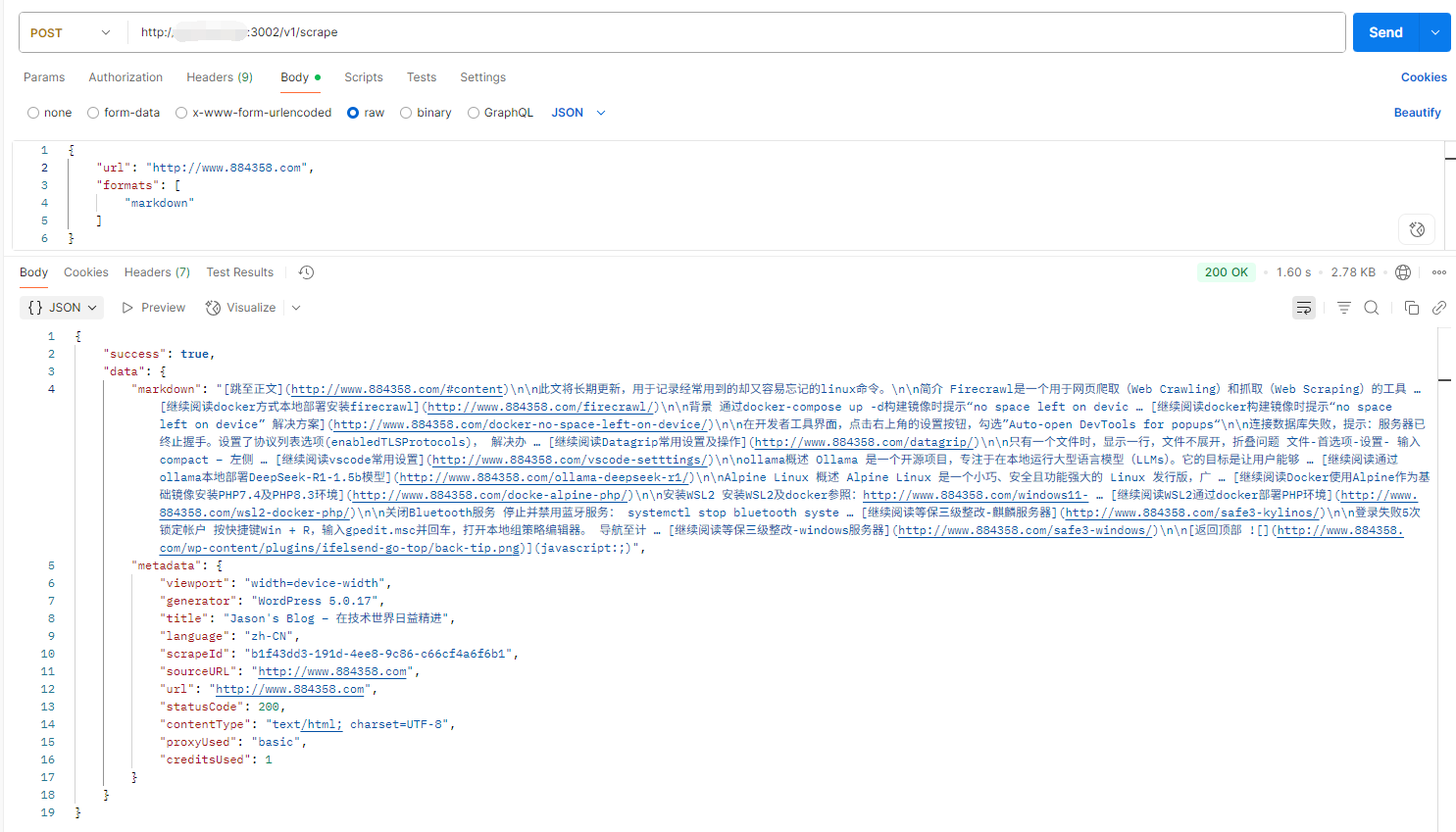
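The API may need some time after docker compose up -d before it answers, so a scripted check should retry rather than test once. The sketch below is a generic retry helper; wait_until is a hypothetical name, and SERVER_IP in the example is a placeholder for your server's address.

```shell
# wait_until TRIES DELAY CMD...
# Runs CMD until it succeeds, at most TRIES times, sleeping DELAY seconds
# between attempts. Returns 0 on success and 1 if every attempt failed.
wait_until() {
  tries=$1
  delay=$2
  shift 2
  attempt=1
  while :; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    if [ "$attempt" -ge "$tries" ]; then
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example: try the /test endpoint up to 30 times, 2 seconds apart.
#   wait_until 30 2 curl -fsS "http://$SERVER_IP:3002/test" && echo "Firecrawl is up"
```

The -f flag makes curl return a non-zero exit code on HTTP errors, which is what lets the loop distinguish a ready server from one that is still starting.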

Scraping a single page

curl --request POST \
  --url http://[server-ip]:3002/v1/scrape \
  --header 'Content-Type: application/json' \
  --data '{
    "url": "http://www.884358.com",
    "formats": ["markdown"]
  }'

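To scrape content generated dynamically by JavaScript, add the waitFor parameter, which gives the page time (in milliseconds) to render before it is scraped. For example, this request body waits one second:

{
  "url": "https://www.example.com",
  "waitFor": 1000,
  "formats": ["markdown"]
}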
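When scraping several pages, it is convenient to generate the request body instead of editing JSON by hand. The following is a minimal sketch under stated assumptions: scrape_payload is a hypothetical helper name, SERVER_IP is a placeholder, and the URL is inserted without escaping, so it must not contain double quotes or backslashes.

```shell
# scrape_payload URL [WAIT_MS]
# Prints a JSON body for /v1/scrape that requests markdown output.
# When WAIT_MS is given, a waitFor field (milliseconds) is included.
scrape_payload() {
  if [ -n "${2:-}" ]; then
    printf '{"url": "%s", "waitFor": %d, "formats": ["markdown"]}\n' "$1" "$2"
  else
    printf '{"url": "%s", "formats": ["markdown"]}\n' "$1"
  fi
}

# Example:
#   curl -sS -X POST "http://$SERVER_IP:3002/v1/scrape" \
#     -H 'Content-Type: application/json' \
#     -d "$(scrape_payload https://www.example.com 1000)"
```

Omitting the second argument produces the same body as the plain single-page request.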

Crawling a website

curl -X POST http://[server-ip]:3002/v1/crawl \
  -H 'Content-Type: application/json' \
  -d '{
    "url": "https://docs.firecrawl.dev",
    "limit": 10,
    "scrapeOptions": {
      "formats": ["markdown", "html"]
    }
  }'
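A crawl runs asynchronously. As I understand the v1 API, the POST above returns a job id, and the results are fetched later from /v1/crawl/<id>, whose status field reads scraping until the job finishes. The sketch below relies on that assumed response shape, so check it against the official documentation. json_string_field and poll_crawl are hypothetical helper names, and the grep/sed extraction is a shortcut for simple responses, not a general JSON parser.

```shell
# json_string_field KEY
# Reads JSON on stdin and prints the first string value stored under KEY.
json_string_field() {
  grep -o "\"$1\"[[:space:]]*:[[:space:]]*\"[^\"]*\"" |
    head -n 1 |
    sed 's/^.*:[[:space:]]*"\(.*\)"$/\1/'
}

# poll_crawl BASE_URL JOB_ID
# Prints the job status every 5 seconds until it is no longer "scraping".
poll_crawl() {
  while :; do
    status=$(curl -sS "$1/v1/crawl/$2" | json_string_field status)
    echo "status: $status"
    [ "$status" = "scraping" ] || break
    sleep 5
  done
}

# Example (SERVER_IP is a placeholder, crawl_body.json holds the JSON body above):
#   job_id=$(curl -sS -X POST "http://$SERVER_IP:3002/v1/crawl" \
#     -H 'Content-Type: application/json' -d @crawl_body.json | json_string_field id)
#   poll_crawl "http://$SERVER_IP:3002" "$job_id"
```

Once polling stops, a final GET on the same URL should return the crawled pages. If jq is installed, it is a more robust way to read the fields.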

Official API documentation

For more details on the request parameters, see the official API documentation:

https://docs.firecrawl.dev/features/scrape

Reference:

https://www.cnblogs.com/skystrive/p/18893148